
AI Security: Customer Needs And Opportunities

By Sekhar Sarukkai - Cybersecurity@UC Berkeley

September 28, 2024

All signs in the industry point toward rampant growth in the innovation and adoption of AI tools and services. Investments in artificial intelligence (AI) startups surged to $24 billion from April to June, more than doubling from the previous quarter, according to data from Crunchbase. Some 75% of founders in the latest Y Combinator cohort are working on AI startups, and almost all tech vendors are revamping their strategies to be AI-centric.

In a recent survey, PwC found that more than 50% of companies have already adopted genAI and that 73% of US companies have adopted AI in at least some areas of their business. Just this month, OpenAI also announced its one-millionth enterprise seat, roughly a year after it released its enterprise product.

All this investment and innovation also brings the dangers of AI: security, safety, privacy, and governance concerns that could keep enterprises from using AI in production. This blog, the first in a series, captures my perspective, gathered over the last year, on the key security challenges facing the AI stack.

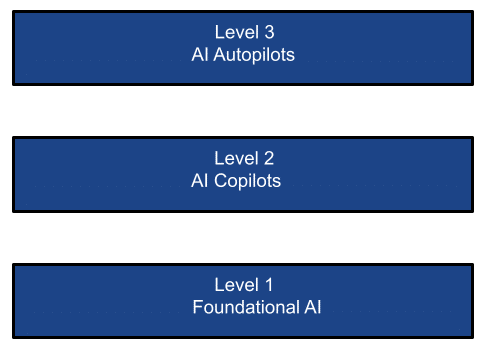

The Simplified AI Stack

The proliferation of AI technologies can be viewed in the following three layers:

Layer 1: Foundational AI

Foundation models are a form of generative artificial intelligence. They generate output from natural-language prompts. Models are based on complex neural networks, including generative adversarial networks, transformers, and variational autoencoders. The pace of innovation in foundational AI has been breathtaking, with game-changing releases, most recently the multimodal GPT-4o. Investors have poured big money into this layer, in essence picking the winners in this core AI infrastructure layer.

There are two types of infrastructure in this layer:

- Foundation models, dominated by the likes of OpenAI, Google (Gemini), Mistral, xAI (Grok), Meta (Llama), and Anthropic. These models (open-source or closed-source) are trained on massive corpora of data (public or private). Models can be used for inference as SaaS (such as ChatGPT) or PaaS (such as AWS Bedrock and the Azure/Google AI platforms), or deployed in private instances. These foundation model companies alone account for almost 50% of Nvidia's AI chip business.

- Custom models, thousands of which can be found in marketplaces such as Hugging Face. These models are trained on specialized data sets (public or private) for targeted use cases or verticals. They can also be consumed as SaaS or PaaS services, or deployed in private instances. In addition, enterprises can create their own fine-tuned models and deploy them in private data centers, private clouds, or hybrid environments (e.g., H2O.ai). Recent quarterly reports from Microsoft, for example, show that while general public cloud growth excluding AI PaaS is only modest, AI PaaS is the fastest-growing part of the public cloud business.

LLM/SLM Use Cases:

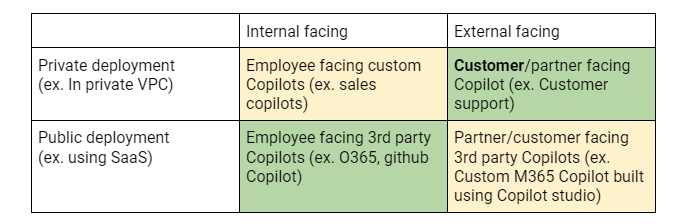

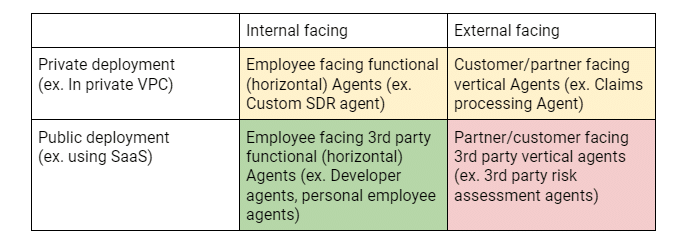

Customer use cases in each layer can be summarized along two dimensions. The X-axis identifies whether an AI effort or application is targeted at internal employees or at external customers and partners. The Y-axis identifies whether the AI application is deployed in a private instance (say, on Google or Microsoft AI platforms or AWS Bedrock) or consumed as a third-party SaaS service (such as ChatGPT). This matrix helps clarify security risks, buyer/adopter motion, and key requirements.

The above 2×2 matrix shows the different use cases driving adoption of LLMs in enterprises and the customer deployment priorities. Green cells indicate current investments/POCs. Yellow cells indicate active developments, and red cells indicate potential future investments.
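The two axes of the matrix can be made concrete with a minimal Python sketch. It assumes each use case carries exactly one audience and one deployment model; the names `Audience`, `Deployment`, `UseCase`, and `group_by_quadrant`, as well as the sample use cases, are hypothetical and do not come from any product or from the matrices themselves.

```python
# Hypothetical model of the 2x2 use-case matrix (illustrative names only).
from dataclasses import dataclass
from enum import Enum


class Audience(Enum):
    """X-axis: who the AI application is targeted at."""
    INTERNAL = "internal employees"
    EXTERNAL = "external customers/partners"


class Deployment(Enum):
    """Y-axis: how the AI application is deployed."""
    PRIVATE_INSTANCE = "private instance"  # e.g., a model hosted on AWS Bedrock
    THIRD_PARTY_SAAS = "3rd party SaaS"    # e.g., ChatGPT


@dataclass(frozen=True)
class UseCase:
    name: str
    audience: Audience
    deployment: Deployment


def group_by_quadrant(
    use_cases: list[UseCase],
) -> dict[tuple[Audience, Deployment], list[str]]:
    """Place each use case into one cell of the 2x2 matrix.

    All four cells are always present, so empty quadrants show up
    explicitly instead of being silently missing.
    """
    grid: dict[tuple[Audience, Deployment], list[str]] = {
        (a, d): [] for a in Audience for d in Deployment
    }
    for uc in use_cases:
        grid[(uc.audience, uc.deployment)].append(uc.name)
    return grid


if __name__ == "__main__":
    # Hypothetical use cases, for illustration only.
    cases = [
        UseCase("employee Q&A assistant", Audience.INTERNAL, Deployment.THIRD_PARTY_SAAS),
        UseCase("customer support bot", Audience.EXTERNAL, Deployment.PRIVATE_INSTANCE),
    ]
    for (audience, deployment), names in group_by_quadrant(cases).items():
        print(f"{audience.value} / {deployment.value}: {names}")
```

Tagging each use case by quadrant in this way makes it straightforward to attach different security requirements to each cell, which is the purpose the matrix serves.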

Layer 2: The AI Co-pilots

If mindshare is the measure of success at the foundational AI layer, revenue is undoubtedly the yardstick in this layer. In the internet boom, the internet infrastructure vendors (think AT&T) were critical but were not the biggest business beneficiaries of the internet revolution. The bigger winners were companies such as Google, Uber, and Meta that used this infrastructure to create new and interesting applications. Similarly, in the AI era, high-revenue applications are already emerging in the form of AI co-pilots. At Ignite 2023, Microsoft CEO Satya Nadella proclaimed that Microsoft is a Copilot company, envisioning a future where everything and everyone will have a co-pilot. Studies already point to potential productivity gains of 30+% for developers and 50+% for insurance claims processing. No wonder Nadella recently stated that Copilot is the fastest-growing product in Microsoft's M365 suite. M365 Copilot has already become the runaway leader in enterprise co-pilot deployments, and Skyhigh's recent data confirms this with a 5,000+% increase in M365 Copilot usage over the last six months.

More importantly, M365 Copilot is the dominant genAI technology in use at large enterprises, even though its penetration is only 1% of the M365 business, leaving huge headroom for growth. The impact on enterprises should not be underestimated: the amount of enterprise data being indexed by, and shared with, M365 Copilot is unprecedented. Almost all enterprises block ChatGPT, yet almost all of them allow M365 Copilot, which in turn uses Azure AI services (primarily OpenAI models). The per-seat add-on pricing of M365 Copilot is shaping up to be the model for all SaaS vendors rolling out their own co-pilots, generating a lucrative revenue stream.

Co-pilot Use Cases:

The above 2×2 matrix shows the different use cases driving adoption of Copilots in enterprises and the customer deployment priorities. Green cells indicate current investments/POCs. Yellow cells indicate active developments, and red cells indicate potential future investments.

Layer 3: The AI Auto-pilots

Then there is the AI auto-pilots layer: agentic systems that perform tasks with little to no human intervention. This promises to be the layer that breaks open the SI/consulting business, which today is manual, expensive, and by some estimates roughly 8 to 10 times the size of the SaaS/software business. While still early, these agentic systems promise to excel at task analysis, task breakdown, and autonomous task execution in response to goals set (or implied) by users, potentially eliminating the human in the loop. A lot of VC funding currently targets this space, and many of the AI startups in the recent YC cohort fall into this layer. Just last week, Salesforce announced Agentforce to boost employee productivity, and Microsoft introduced Copilot agents, initially targeted at SMBs, that can automate business processes, along with an extension of Copilot Studio to support custom agents.

There are autonomous agents for a wide range of roles:

- Personal assistants (e.g., Multion.ai)

- Support agents (e.g., Ada.cx, intercom.com)

- Developers (e.g., Cognition.ai (Devin), Cursor.com, Replit.com)

- Sales teams (e.g., Apollo.io, accountstory.com)

- Security operations (e.g., AirMDR.com, Prophetsecurity.ai, AgamottoSecurity.com, torq.io)

- UX researchers (e.g., Altis.io, Maze.co)

- UX designers (e.g., DesignPro.ai)

- System integrators (e.g., TechStack.management)

- Project managers (e.g., Clickup.com)

- Finance back-office employees (e.g., Prajna.ai)

- Even a universal AI employee (e.g., ema.co)

Some studies indicate that agentic systems that automate actions could generate $1.5 trillion of additional software spending, positioning this new breed of companies as the next vanguard of service-as-software companies that could dominate the IT landscape.

Auto-pilot Use Cases:

The above 2×2 matrix shows the different use cases driving adoption of Agents in enterprises and the customer deployment priorities. Green cells indicate current investments/POCs. Yellow cells indicate active developments, and red cells indicate potential future investments.

AI Security Needs and Opportunities

Not surprisingly, since ChatGPT was unveiled just two years ago, previously unknown security, privacy, and governance terms have become mainstream: prompt engineering, jailbreaking, hallucinations, data poisoning, and more. The industry has been quick to embrace these new issues, producing metrics that measure how prone various LLMs are to exhibiting these characteristics. The Open Worldwide Application Security Project (OWASP) has done good work identifying the top 10 LLM risks in a comprehensive, actively updated list. In addition, Enkrypt AI has done a good job of creating and maintaining a comprehensive LLM safety leaderboard, a handy tool for benchmarking the safety scores of leading LLMs across four dimensions: bias, toxicity, jailbreak, and malware. The same analysis can also be performed on custom LLMs through Enkrypt AI's red-teaming.

However, given the full context of the AI stack, taking a narrow view of AI security is naive. Each layer raises distinct issues and unique challenges, often for different buyers. For example, red-teaming to identify issues with models that customers deploy is likely to interest the application team, whereas data leakage via co-pilots, or through data used to fine-tune models, is more likely a concern for the information security team or the CDO.

In the next few blogs, I will dive deeper into the security risks and proposed solutions in each of the three described layers.

Other Blogs In This Series:

- Part 1 – AI Security: Customer Needs And Opportunities

- Part 2 – Foundational AI: A Critical Layer with Security Challenges

- Part 3 – Security Risks and Challenges with AI Copilots
