Adopt ChatGPT Without Putting Your Business-Critical Data at Risk

By Tony Frum - Product Specialist, Skyhigh Security

June 6, 2023 6 Minute Read

Having grown up knowing how to use a rotary telephone, I’ve seen a fair amount of technological advancement in my years. However, I think it might pale in comparison to what my children will see in their lifetimes due to the advent of Artificial Intelligence (AI). Experts in the field use over-the-top comparisons like the atomic bomb and the discovery of fire to describe its significance, and there is a decent chance that these won’t be exaggerations.

There is a great deal of consternation surrounding this development, with some calling for security measures, government regulations, and even a moratorium on AI research. In the cybersecurity field, there is considerable anxiety about the use of AI tools such as ChatGPT and their implications for data security. As a maniacally data-focused Security Service Edge (SSE) vendor, we at Skyhigh Security are asked at least daily how data loss via ChatGPT can be prevented. Our customers are reading about situations like Samsung’s data loss incident via ChatGPT and want to ensure they have the right controls in place.

Despite the novelty of tools like ChatGPT, I have to resist the urge to quote Ecclesiastes saying, “There’s nothing new under the sun.” While the specific risk of an AI model being trained on your data may be a new twist, the logistics of protecting your data from ChatGPT are actually nothing new. This is what we do every day! And, to put it bluntly, if you have the right data security controls in place, then you should already have your bases covered. Let’s briefly review the applicable controls, many of which you probably already have deployed, and how they can help you address this new, but not-so-new, risk.

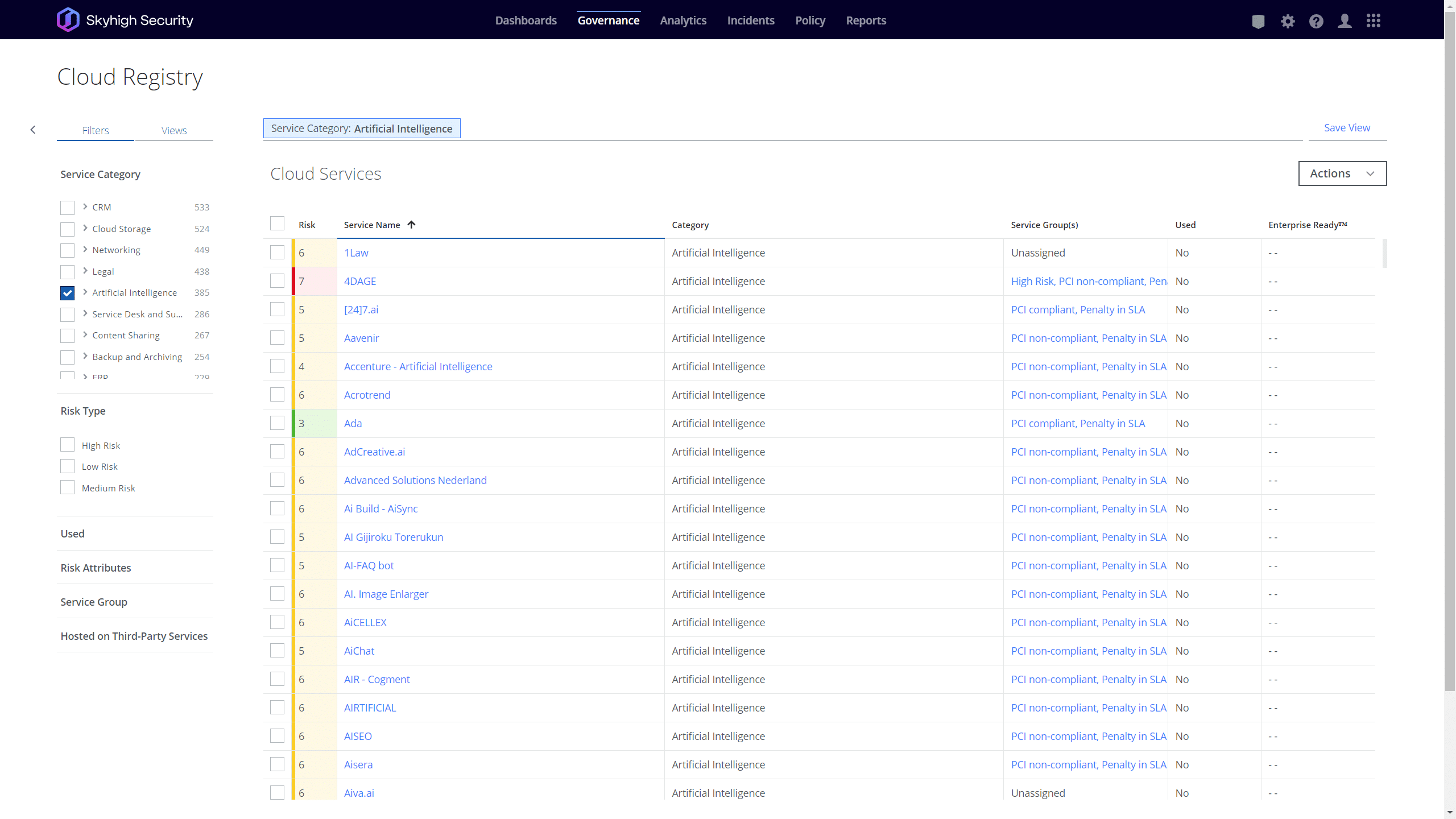

Blocking by Category/App

Many organizations have no appetite for the risks posed by AI chatbots and other generative AI services. For these entities, outright blocking the use of these apps is their preferred strategy. Apple recently joined a growing list of these companies. But, as I’ve told our Secure Web Gateway (SWG) customers for years, “Blocking something is easy. Anyone can do that. Knowing what to block is the hard part.” That being said, any SWG worth its salt today should already be aware of these AI tools and should be able to easily build a policy to block them. At the time of this writing, Skyhigh Security’s cloud registry contains a full risk analysis of over 400 AI applications, and the list is growing daily! Each of these includes over 65 risk attributes and a list of all domains related to the apps. In just a couple of clicks, this rich data can be leveraged in our customers’ web security policies to control these apps. For customers looking to ban the use of AI chatbots, this is the “easy button” for them.

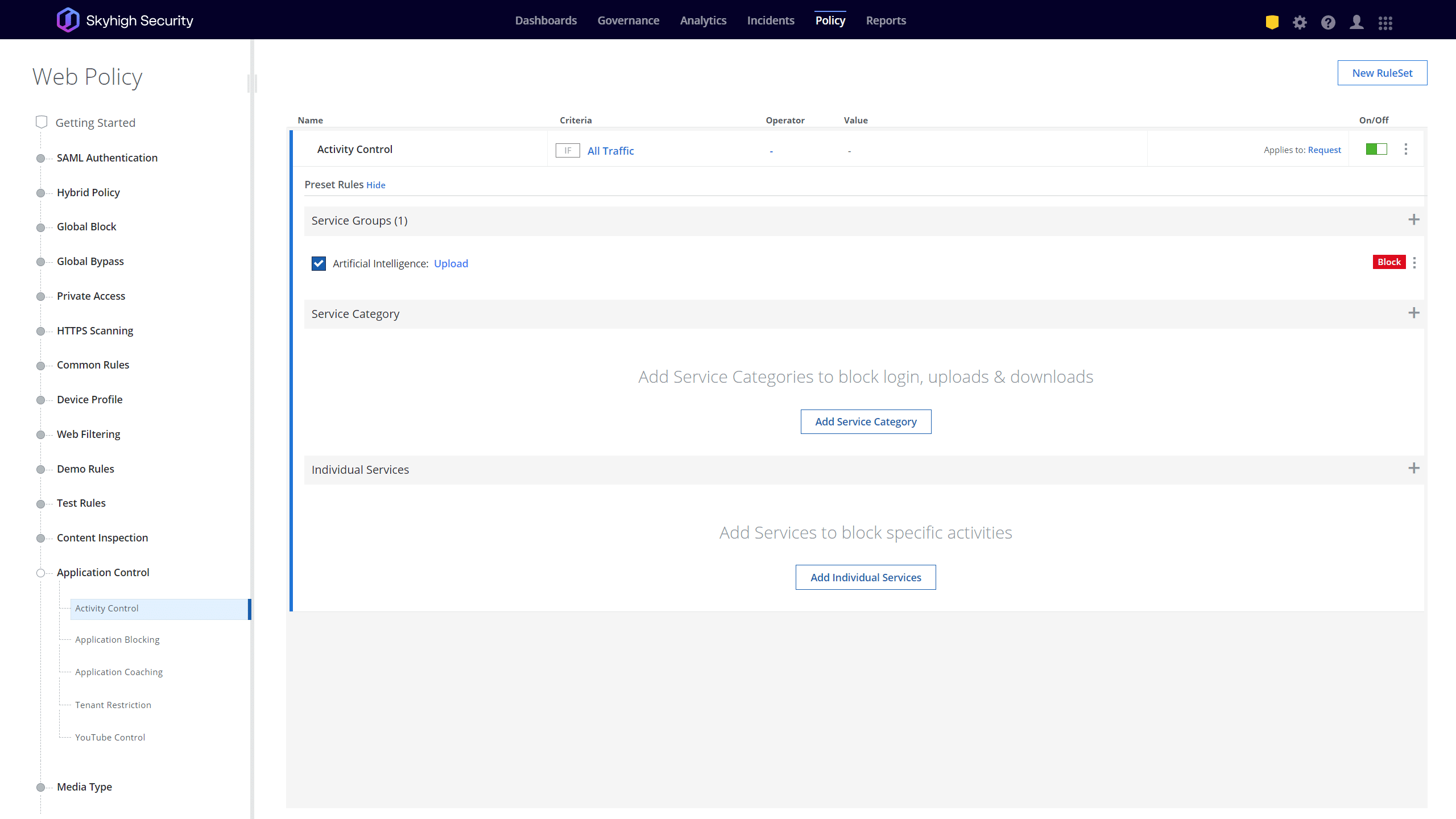

Restricting specific activities

Some organizations see value in permitting the use of AI chatbots but want to ensure their more critical data remains secure. There are several ways to skin this proverbial cat today. One option is to use Activity Control to permit an AI-based application but prevent certain activities, such as uploading files or sending prompts. Skyhigh’s SWG supports activity controls for all 35k+ applications in our registry, including the over 400 apps categorized as AI. Again, in just a few clicks you can prevent uploads of any content to these applications.

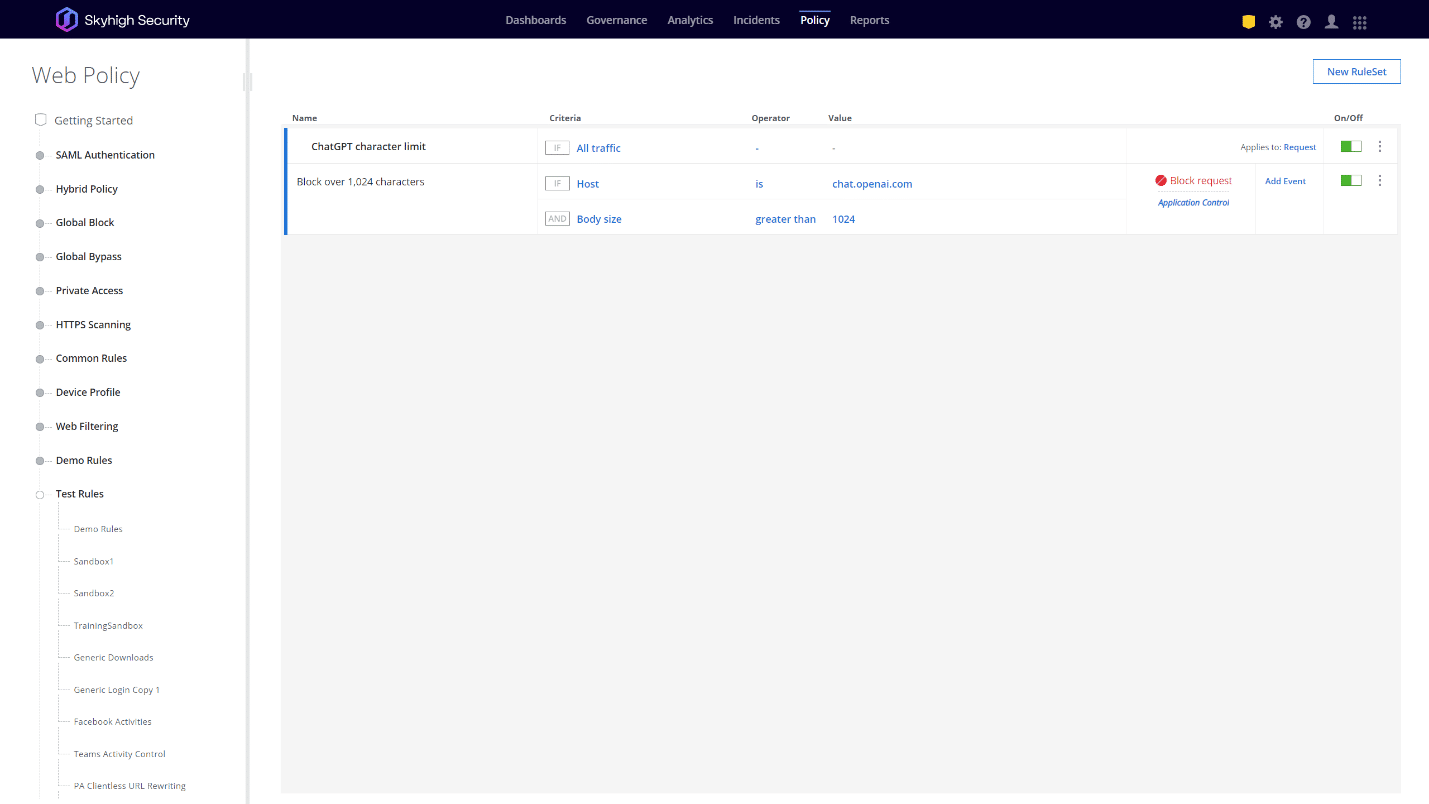

Of course, some applications, such as ChatGPT, may be rendered useless by preventing activities such as sending questions. What good is allowing the app if you can’t ask it questions? In these situations, customers may want to take a more nuanced approach. Samsung, for example, initially responded to their data leaks by limiting ChatGPT prompts to 1,024 bytes. Other options may include preventing uploads of certain types of files, limiting access based on geolocation, and more. For many of these options, a highly granular Web policy engine is required. The Skyhigh SWG solution has, without a doubt, the most powerful and granular Web policy engine on the market today, making quick work of use cases such as these. For example, with a single custom rule, our customers can implement a policy that blocks any request to an AI-based platform that exceeds 1,024 bytes. So, whatever your preferred approach is, we are likely to enable you to exercise that control with ease.

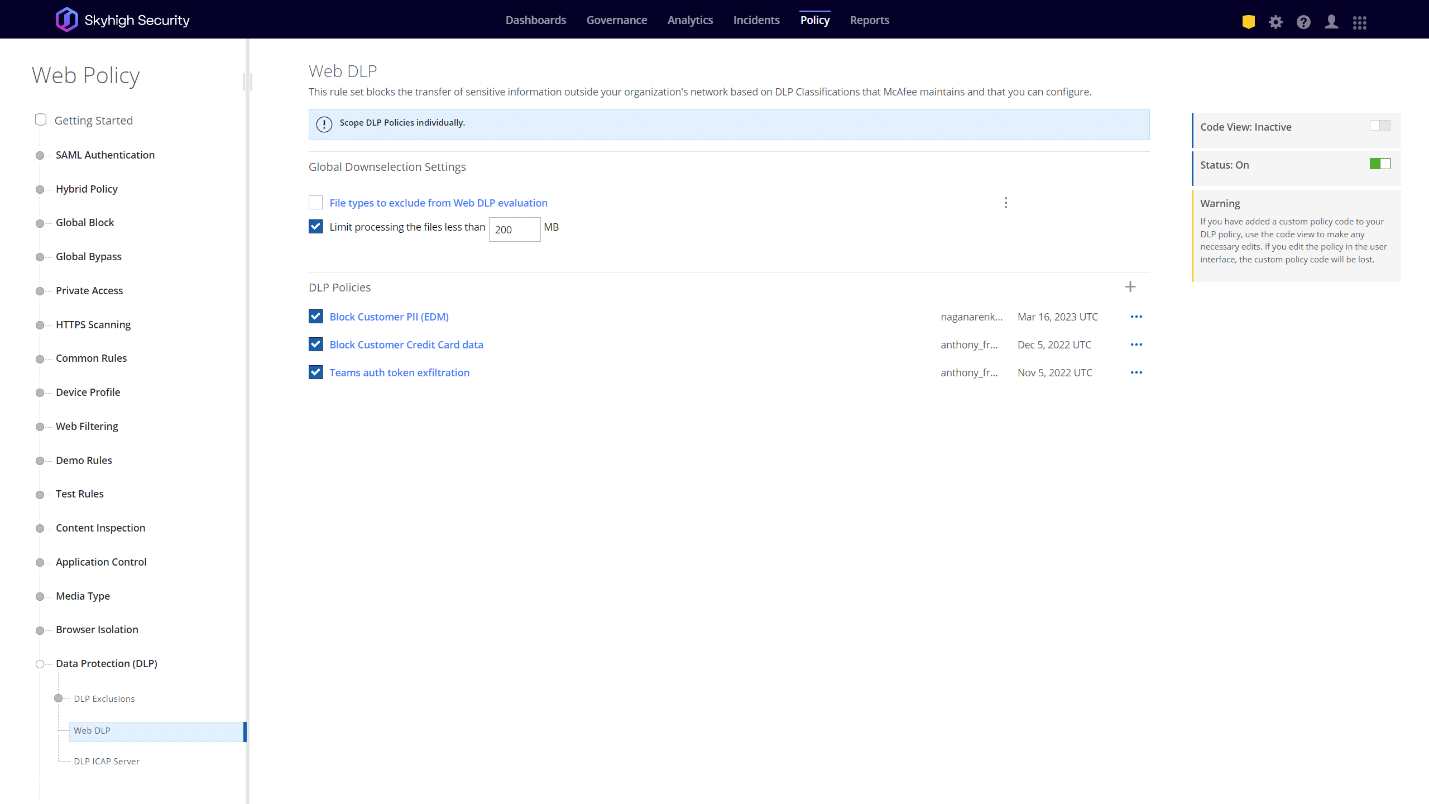
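To make the size-limit idea concrete, here is a minimal sketch of the decision logic in Python. It is not Skyhigh's rule syntax or implementation; the domain list, the `decide` function, and the names used are assumptions made purely for illustration, and a real policy would draw its domain list from an application registry rather than a hard-coded set.

```python
from urllib.parse import urlparse

# Hypothetical, hard-coded list of AI-service domains (illustrative only).
AI_DOMAINS = {"chat.openai.com", "api.openai.com"}

# Size threshold taken from the Samsung example in the text.
MAX_BODY_BYTES = 1024


def is_ai_host(host: str) -> bool:
    """Return True if the host is a listed AI domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def decide(url: str, body: bytes) -> str:
    """Block requests to AI domains whose body exceeds the byte limit."""
    host = urlparse(url).hostname or ""
    if is_ai_host(host) and len(body) > MAX_BODY_BYTES:
        return "block"
    return "allow"
```

Note that the limit is measured in bytes, not characters, so a prompt containing multi-byte characters reaches the threshold sooner than its character count suggests.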

Protecting your business-critical data

Personally, I feel that the best approach involves data awareness. In the cloud-based world of today, organizations should already have a good handle on what data they need to protect, and they should apply a policy to prevent that data from being sent to any external application that is not sanctioned and properly secured by the organization. In this regard, ChatGPT is really no different from a personal Dropbox account. Your organization may tolerate the use of personal Dropbox, but it should also ensure that its most critical data is not uploaded to this unsanctioned and unsecured application. In the same way, organizations with mature data security programs will likely already have a policy to prevent sensitive data from being exfiltrated to any unsanctioned application, and that policy should naturally extend to apps like ChatGPT. Skyhigh Security customers are ahead of the curve in this area, as they are already leveraging a mature and highly advanced Data Loss Prevention (DLP) engine with robust capabilities such as Exact Data Match (EDM), Indexed Data Matching (IDM), Optical Character Recognition (OCR), and more.
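For readers unfamiliar with the general idea behind exact data matching, the sketch below shows one simplified way it can work: known sensitive values are stored only as hashes, and outbound text is checked token by token against that set. This is a toy illustration under my own assumptions, not a description of how Skyhigh's DLP engine is built; the function names and the normalization rule are hypothetical.

```python
import hashlib
import re


def fingerprint(value: str) -> str:
    """Hash a value after stripping punctuation and case differences."""
    normalized = re.sub(r"[^a-z0-9]", "", value.lower())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def build_index(sensitive_values):
    """Store only hashes, so the index never holds the raw sensitive data."""
    return {fingerprint(v) for v in sensitive_values}


def contains_sensitive(text: str, index) -> bool:
    """Return True if any token in the text matches an indexed value."""
    tokens = re.findall(r"[\w@.-]+", text)
    return any(fingerprint(t) in index for t in tokens)


# Example: a prompt is checked before it is allowed to leave the network.
index = build_index(["123-45-6789"])
prompt = "Please summarize the record for SSN 123-45-6789."
flagged = contains_sensitive(prompt, index)
```

A check like this only matches single tokens; values that span several words, or that appear inside images or documents, need the more advanced techniques named above.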

One recent development from OpenAI that brings additional hope is a new control to disable ChatGPT conversation history and training on those conversations. With the flip of a switch in the UI, users can indicate that they do not want their data stored or used for training ChatGPT. This protection is not on by default, so users have to opt in manually, but it offers an extra layer of security to prevent the ChatGPT model from being trained on potentially sensitive data. Skyhigh Security has already tested a policy in our SWG solution that forces this new option to be enabled for any managed device going through our proxy, regardless of which account the user is logged into.

Sanctioning the service

OpenAI has also announced plans for a ChatGPT Business subscription in the future. With this new subscription option, organizations will be able to create business accounts for their users and centrally manage how their data is handled. This could, potentially, bring several other capabilities of the Skyhigh Security SSE platform into play. The first is what we call “tenant restrictions” which enables you to permit logins only to your sanctioned tenant, or instance, of an application while blocking personal and third-party accounts. This will be critical if organizations want to go the ChatGPT Business route to apply data handling policies across the company. Doing so would be futile if you couldn’t also prevent users from logging in to uncontrolled, personal ChatGPT accounts.
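At its core, a tenant restriction is an allow-list check applied at login time. The sketch below illustrates that check using the login's email domain as a stand-in for the tenant identifier. That choice is an assumption for illustration only: how a ChatGPT Business tenant would actually be identified is not known at the time of writing, and `SANCTIONED_DOMAINS` and `login_allowed` are hypothetical names.

```python
# Hypothetical set of domains that map to the organization's sanctioned tenant.
SANCTIONED_DOMAINS = {"example.com"}


def login_allowed(login_email: str) -> bool:
    """Permit a login only when the account belongs to a sanctioned domain."""
    _, sep, domain = login_email.rpartition("@")
    # Reject malformed identifiers that contain no "@" at all.
    return bool(sep) and domain.lower() in SANCTIONED_DOMAINS
```

With this check in place, `login_allowed("alice@example.com")` is permitted while a personal account such as `alice@gmail.com` is rejected.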

Skyhigh Security’s engineering team has already successfully performed a proof of concept interacting with OpenAI’s APIs to scrutinize files uploaded to the OpenAI platform using the cloud access security broker (CASB) portion of our portfolio. Although the OpenAI APIs do not yet support all use cases, we will continue to look for opportunities to scan for and remediate sensitive data in enterprise tenants in an out-of-band process using our CASB API capabilities.

Similarly, if ChatGPT offers the ability to use single sign-on (SSO) from a third-party identity provider, then Skyhigh Security’s CASB technology will be able to bring value. By plugging into the SAML hand-off process between ChatGPT and a third-party identity provider, the CASB can get inline for any device in the world authenticating to your ChatGPT tenant and perform device access control. In this way, we would be able to restrict access to your organization’s ChatGPT tenant so that only trusted devices gain access. It has not been confirmed, as of this writing, that OpenAI plans to offer SSO, but it’s highly likely, as this is a common approach for authentication and account management.

To my colleagues at Skyhigh Security, what we’ve just discussed will sound like a brief summary of our daily conversations with customers. This is what we do. AI chatbots, and specifically ChatGPT, may bring a new twist to the risk of data loss, but for data security professionals it’s still the same job requiring the same tools and with the same stakes. Chances are you’re prepared with many of these tools already, but if you find your security controls lacking, give us a chance to show you how we can help. This is right in our wheelhouse because protecting our customers’ data is what we do every single day.

To learn more about how Skyhigh Security can help, contact us today.
